Microsoft Copilot isn’t just another AI tool; it’s a comprehensive platform designed to be your indispensable companion, enhancing productivity, fostering creativity, and facilitating information comprehension all through a user-friendly chat interface. The concept of Copilot emerged two years ago when Microsoft introduced GitHub Copilot, aiming to assist developers in writing code interactively. Microsoft then expanded its scope, integrating it with other services like Microsoft Office 365, Dynamics 365, Power BI, Windows, and

Let’s explore the complexities of Copilot further, gaining insight into its diverse layers and the robust ecosystem of plugins that enhance its capabilities:

The Plugin Ecosystem: Enhancing Copilot’s Capabilities

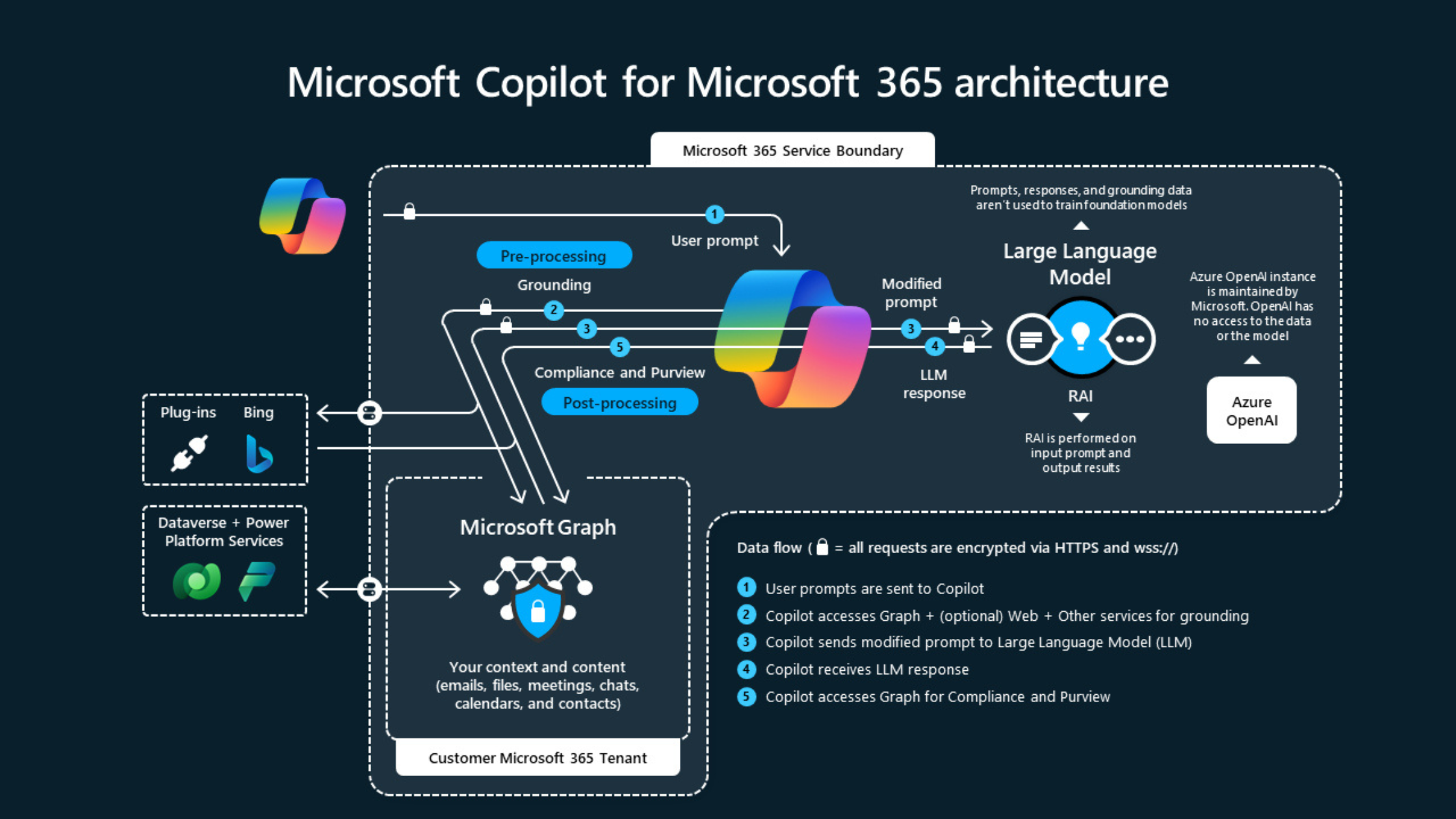

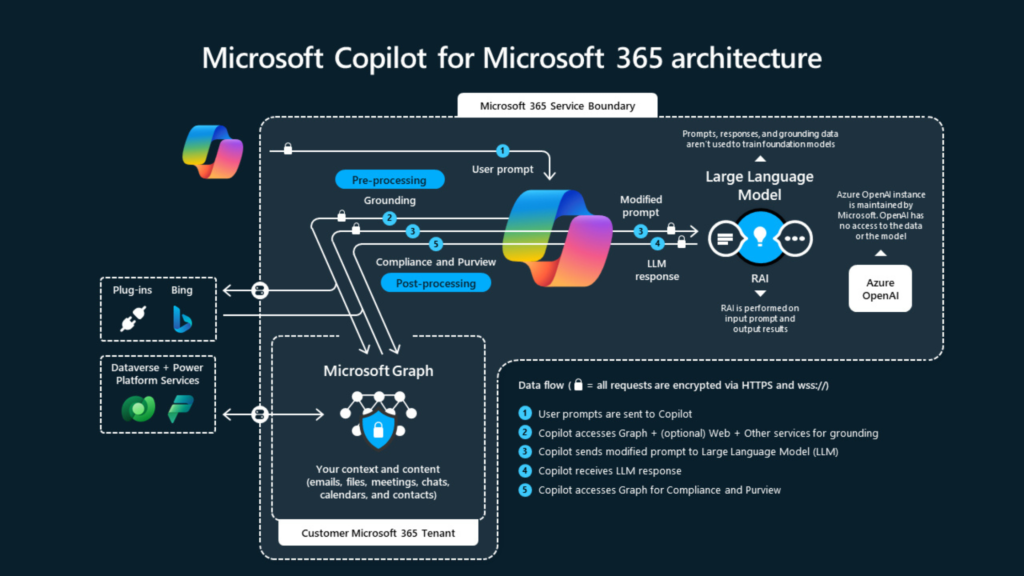

Think of Copilot as a versatile toolkit, with plugins serving as specialized tools that enhance its functionality. These plugins enable Copilot to perform various tasks, from making reservations at your favorite restaurant to securely accessing proprietary company data. They empower Copilot to tap into external resources like Bing and Power Platform, broadening its scope and utility. Copilot’s large language models (LLMs) are trained on publicly available data, so extending Copilot for a specific company or organization requires access to that organization’s systems. Plugins act as bridges between the large language models and websites or backend systems.

Understanding Copilot’s Architecture: A Multi-layered Approach

1. Application Level: Where Interactions Take Place

At the application level, the interface of Copilot is defined; this is where end users interact with it. Here, users can engage in conversations, assign tasks, or seek assistance within a familiar chat environment. This simple chatbot interface processes user input using generative AI models. With Microsoft Copilot, users can also enable publicly available plugins at the application level.

2. AI Orchestration: The Cognitive Core

The AI orchestration layer serves as the heart of Copilot. It interprets user intents from the input, taking into account the conversation history between the user and Copilot. After extracting the intents, it orchestrates actions based on them, handling the decision-making and task sequencing needed to fulfill user requests effectively.

Microsoft has released a developer toolkit for building the orchestration layer, named Semantic Kernel.

https://learn.microsoft.com/en-us/semantic-kernel/overview/
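To make the orchestration idea concrete, here is a minimal Python sketch of intent extraction followed by action sequencing. It does not use the Semantic Kernel API; the `Orchestrator` class, the `KEYWORD_TO_INTENT` table, and the plugin names are all hypothetical, and the keyword matching is only a stand-in for the LLM call a real orchestrator would make.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical keyword-to-intent table; a real orchestrator would ask an LLM instead.
KEYWORD_TO_INTENT: Dict[str, str] = {
    "book": "reservation",
    "reserve": "reservation",
    "sales": "company_data",
}


@dataclass
class Orchestrator:
    # Maps an intent name to the plugin (a plain callable here) that fulfils it.
    plugins: Dict[str, Callable[[str], str]]
    # Intents from the previous turn, used as a very small conversation memory.
    last_intents: List[str] = field(default_factory=list)

    def infer_intents(self, user_input: str) -> List[str]:
        """Return intents found in the input, or fall back to the previous turn's."""
        text = user_input.lower()
        found: List[str] = []
        for keyword, intent in KEYWORD_TO_INTENT.items():
            if keyword in text and intent not in found:
                found.append(intent)
        return found or list(self.last_intents)

    def handle(self, user_input: str) -> List[str]:
        """Run the plugin for each inferred intent, in the order the intents were found."""
        intents = [i for i in self.infer_intents(user_input) if i in self.plugins]
        self.last_intents = intents
        return [self.plugins[i](user_input) for i in intents]
```

A follow-up message with no recognizable keyword, such as "Make it for four", is routed to the same plugin as the previous turn, which is a simplified picture of how conversation history can inform intent.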

3. Foundation and Infrastructure Level: The Backbone of Copilot

At this level, Copilot’s technical framework is established: the optimal AI models are selected, and data storage and retrieval mechanisms are managed. Meta prompts are set to define the role and behaviour of a copilot, restricting responses from the GPT models to relevant ones. This level also houses Copilot’s memory, which is crucial for retaining conversation history and facilitating context-aware interactions.

AI models can be selected through Azure OpenAI Service, which offers several generative AI models. GPT-3.5 Turbo and GPT-4 are the ones most commonly used for copilots.
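The interplay of meta prompt and memory can be sketched as follows. This is an illustration under stated assumptions, not Copilot's actual implementation: the `META_PROMPT` text and the `build_messages` helper are hypothetical, and the `role`/`content` dictionaries follow the message shape commonly used by chat-style GPT APIs. No model is called; the function only assembles what would be sent.

```python
from typing import Dict, List, Tuple

# Hypothetical meta prompt: fixes the copilot's role and restricts its answers.
META_PROMPT = (
    "You are a helpdesk copilot. Answer only questions about company IT policy "
    "and politely decline anything else."
)


def build_messages(
    history: List[Tuple[str, str]],
    user_input: str,
    max_turns: int = 3,
) -> List[Dict[str, str]]:
    """Assemble a chat request: meta prompt, recent memory, then the new input.

    `history` holds (user_text, assistant_text) pairs, oldest first. Only the
    last `max_turns` pairs are kept so the request stays within a size budget.
    """
    recent = history[-max_turns:] if max_turns > 0 else []
    messages = [{"role": "system", "content": META_PROMPT}]
    for user_text, assistant_text in recent:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    messages.append({"role": "user", "content": user_input})
    return messages
```

Trimming to the most recent turns is only one possible memory strategy; summarizing older turns or retrieving them from a store are alternatives a real system might prefer.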

Extending Copilot’s Reach: Customization Options

Microsoft offers various options for tailoring Copilot to meet specific needs and preferences:

Pre-made Plugins:

For quick enhancements, pre-made plugins provide ready-made solutions. These plugins can be enabled in Microsoft Copilot in the Edge browser: sign in with a Microsoft account in the sidebar and turn them on under the plugin option.

Custom Plugin Development:

Unique requirements can be met through custom plugins tailored to specific use cases and data sources. Custom plugins are developed using the OpenAPI schema: a plugin is essentially an API that is published through an OpenAPI description.
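As a rough illustration of what publishing an API through a schema looks like, the sketch below builds a minimal OpenAPI 3.0 description for a restaurant reservation endpoint. Everything specific here is assumed for the example: the `build_plugin_spec` helper, the `/reservations` path, the `createReservation` operation, and the `example.com` URL are hypothetical and not part of any Microsoft plugin.

```python
import json


def build_plugin_spec(base_url: str) -> dict:
    """Return a minimal OpenAPI 3.0 document describing one hypothetical operation."""
    return {
        "openapi": "3.0.1",
        "info": {
            "title": "Table Reservation Plugin",
            "description": "Lets a copilot reserve a restaurant table.",
            "version": "1.0.0",
        },
        "servers": [{"url": base_url}],
        "paths": {
            "/reservations": {
                "post": {
                    # The operation id and summary tell the model what this call does.
                    "operationId": "createReservation",
                    "summary": "Reserve a table at a restaurant",
                    "requestBody": {
                        "required": True,
                        "content": {
                            "application/json": {
                                "schema": {
                                    "type": "object",
                                    "properties": {
                                        "restaurant": {"type": "string"},
                                        "party_size": {"type": "integer", "minimum": 1},
                                    },
                                    "required": ["restaurant", "party_size"],
                                }
                            }
                        },
                    },
                    "responses": {"200": {"description": "Reservation confirmed"}},
                }
            }
        },
    }


if __name__ == "__main__":
    # Print the description as JSON, the form in which it would be hosted.
    print(json.dumps(build_plugin_spec("https://example.com/api"), indent=2))
```

The descriptive fields matter as much as the types, because the language model relies on the summary and parameter names to decide when and how to call the endpoint.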

Microsoft Copilot Studio:

Microsoft has created a powerful tool, based on the low-code/no-code concept, for creating custom AI-powered copilots. It is an intuitive tool for building personalized Copilot experiences, allowing users to define behaviours, prompts, and integrations with little effort. These copilots can be built without the need for developers.

Azure OpenAI Services:

Leveraging Azure’s infrastructure, users can deploy a copilot with custom datasets, enhancing its understanding and responsiveness. Azure OpenAI Service offers a variety of generative AI models and a plug-and-play method for deploying a chatbot to Microsoft Copilot Studio.

DIY Development:

Microsoft has published a GitHub project for developing a custom copilot. By building on this project, developers can create a customized copilot. The project is implemented using the Semantic Kernel framework. https://github.com/microsoft/chat-copilot

Microsoft’s Copilot goes beyond the usual limits of AI help. It’s like a flexible and adaptable friend that fits perfectly into different tasks and areas of work. Whether it’s making daily tasks easier, sparking new ideas, or helping understand things better, Copilot is ready to support and guide you through everything.